Building a sustainable AI-powered IAM program

In decades of working in cybersecurity, one of the most valuable lessons I've learned is how to recognize true inflection points: moments where the technology, the threat, and the business imperative align to open up a new path forward. I believe we are in one of those moments with AI and its application to Identity and Access Management (IAM).

For years, many of us have treated IAM as a necessary, but often manual and reactive, part of our security programs. And here’s the good news: we can get immediate, practical value by applying AI to our existing IAM challenges - today. In a separate post, I’ve outlined 5 pragmatic use cases where your teams can start leveraging AI right now to get quick wins.

But this post is about the bigger picture: moving beyond quick wins to build a sustainable, strategic program. Here's a simple example framework for how we might approach this challenge thoughtfully.

The "Why": The business case for AI in IAM

Before we dive into the “how,” it’s critical to articulate the “why.” The case for investing in AI for IAM is not just about better security; it’s about better business. Managing today’s IAM landscape manually is like trying to direct traffic in a modern metropolis using hand-written ledgers and messengers on foot. AI is our upgrade to a real-time, city-wide traffic control system with predictive analytics.

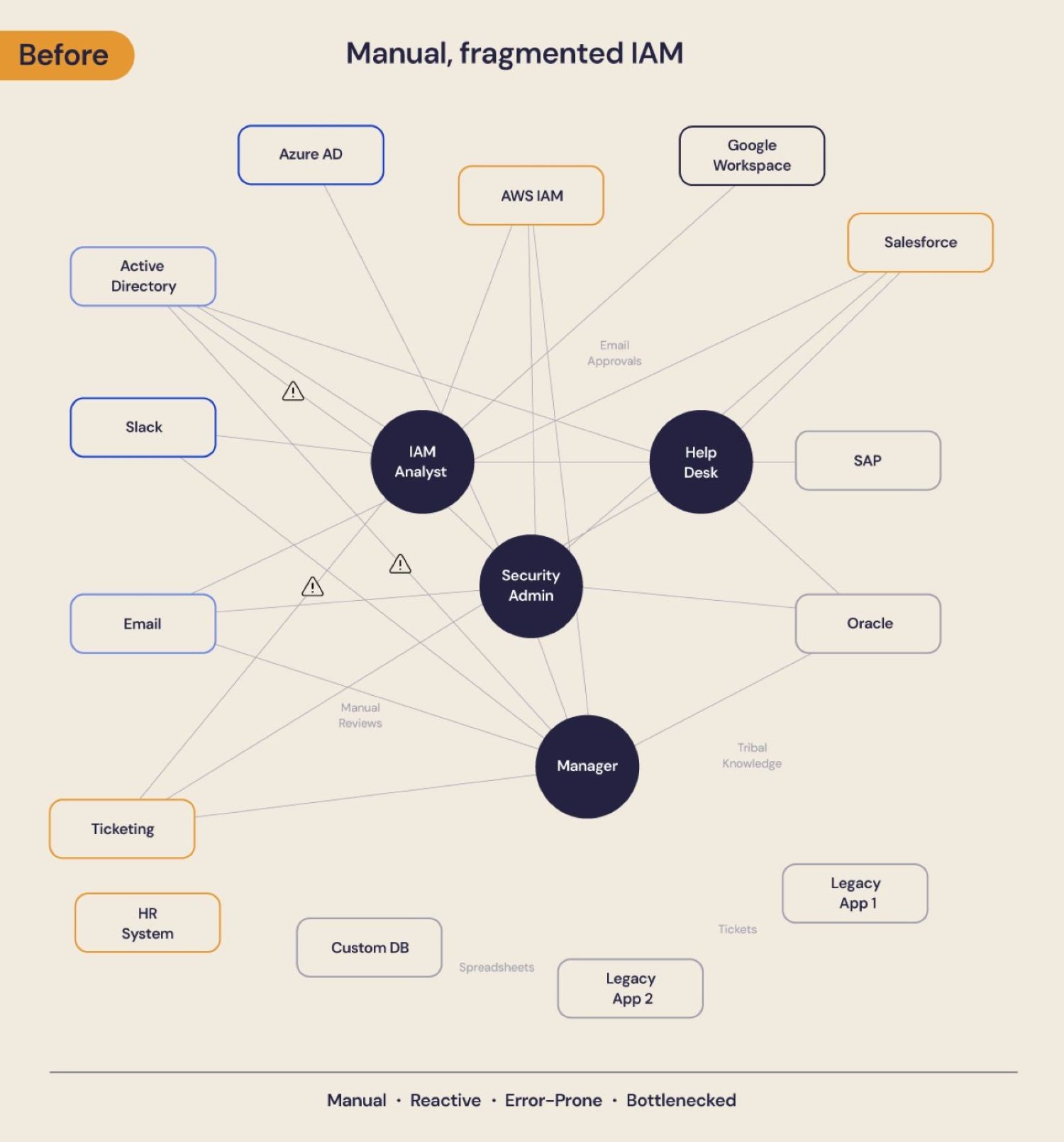

The diagram below illustrates this transformation. First, the current state in many organizations: a tangled, manual, and reactive process where a variety of teams, from IAM analysts and security admins to the help desk and even business managers, are all caught in the critical path, creating a distributed and chaotic bottleneck for every access request and review.

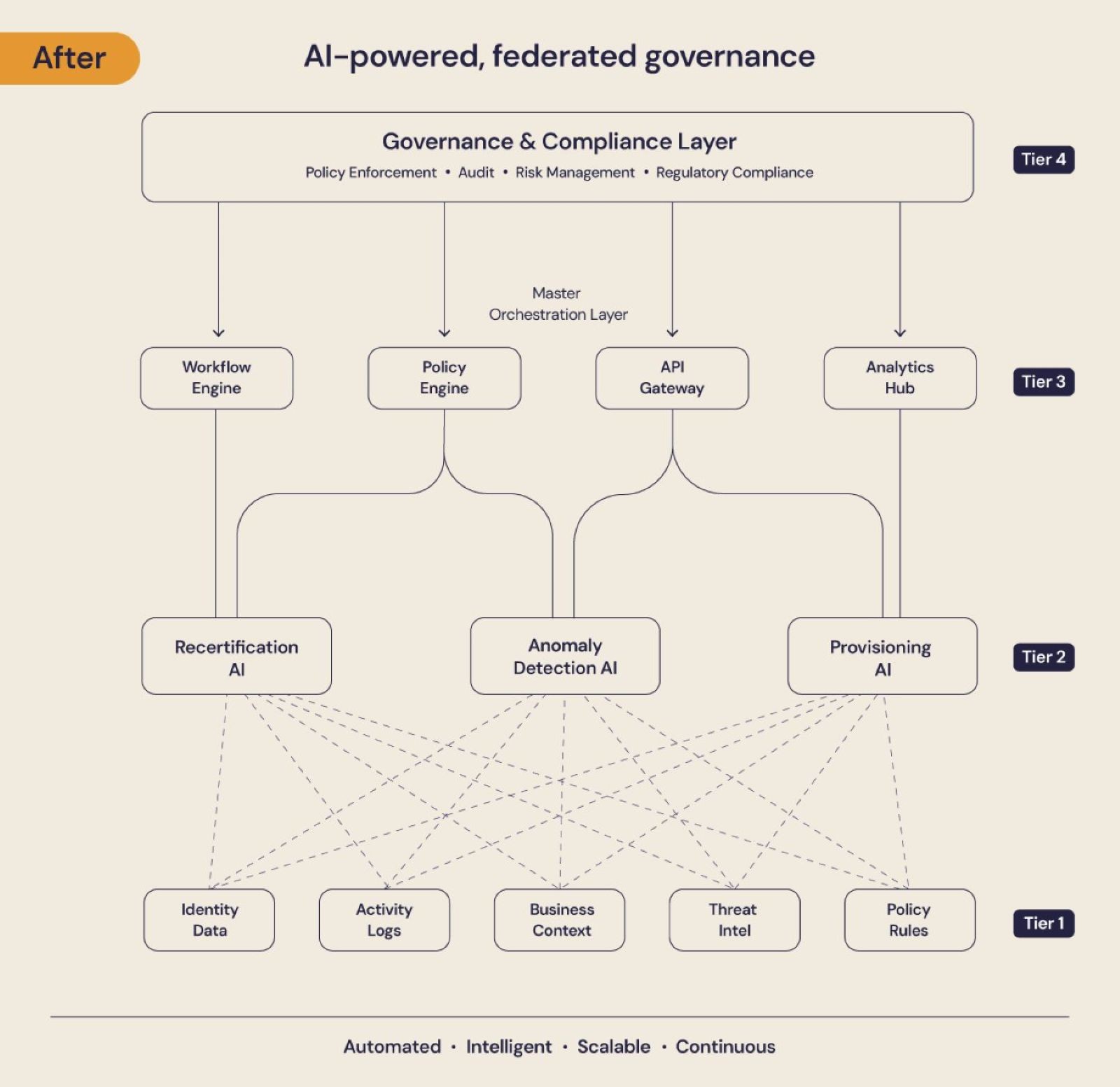

Here, we see the future state: a scalable, intelligent, and federated architecture that brings order to this chaos.

This future state is not a single, monolithic AI brain. Instead, it is a flexible, 4-tier federated model:

Tier 1: Data Sources

At the foundation are the raw data feeds that fuel the AI engines: identity data from HR systems, activity logs from applications, business context from project management tools, threat intelligence feeds, and the organization's own policy rules.

Tier 2: Specialized AI Engines

This is where the analysis happens. Instead of one giant AI, we have multiple, purpose-built AI engines, each specializing in a specific IAM task.

For example:

A Recertification AI that is an expert at analyzing access patterns and recommending which entitlements should be kept or removed.

An Anomaly Detection AI that is an expert at identifying suspicious behavior that deviates from a user's normal baseline.

A Provisioning AI that is an expert at understanding roles and automating the user lifecycle from onboarding to offboarding.
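To make "deviates from a user's normal baseline" concrete, here is a minimal Python sketch of one of the simplest techniques an Anomaly Detection AI could start from: a z-score test against the user's own history. The function name `is_anomalous`, the 3-standard-deviation threshold, and the sample data are illustrative assumptions; a real engine would likely weigh many more signals (time of day, location, peer group) than a single daily count.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Return True if `observed` deviates from the user's baseline by more than
    `threshold` sample standard deviations (a simple z-score test)."""
    if len(history) < 2:
        # Too little history to form a baseline; leave the call to other controls.
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly constant baseline: any change at all is unusual.
        return observed != mu
    return abs(observed - mu) / sigma > threshold


# Hypothetical baseline: distinct resources one user accessed per day last week.
baseline = [12, 15, 11, 14, 13, 12, 16]
print(is_anomalous(baseline, 14))  # False: within the normal range
print(is_anomalous(baseline, 90))  # True: far outside the baseline
```

The per-user baseline is the key design choice: 90 resources in a day might be routine for an administrator and alarming for a marketing contractor.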

Tier 3: Master Orchestration Layer

This is the operational control plane, and it is not a single solution. Instead, it is an assembled set of integrated technologies working together: workflow engines, policy engines, API gateways, and analytics hubs. This modular approach allows organizations to leverage existing investments and add new capabilities as needed. The orchestration layer takes the recommendations from the specialized AI engines and coordinates their execution across the enterprise.

Tier 4: Governance & Compliance Layer

At the strategic top sits the governance and compliance layer. This is where enterprise-wide policy enforcement, audit requirements, risk management frameworks, and regulatory compliance mandates are defined and enforced. This layer ensures that all AI-driven decisions and automated actions align with the organization's risk appetite and compliance obligations.

This federated model is critical because it is scalable, flexible, and resilient. We can add new specialized AI engines as new use cases emerge, swap out orchestration components as technology evolves, and adjust governance policies as regulations change, all without having to re-architect the entire system. This is a blueprint for a sustainable, AI-powered IAM program.
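The decoupling between tiers can be illustrated with a minimal Python sketch. It assumes each Tier 2 engine is a plain function that returns recommendations, a Tier 3 orchestrator that runs whichever engines are registered and routes their output, and a Tier 4 governance policy that decides whether each recommendation may execute automatically. All class names, the 90-day unused-access rule, the risk values, and the 0.5 auto-execution threshold are hypothetical, chosen only to show how the tiers stay independent.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Recommendation:
    engine: str       # which Tier 2 engine produced it
    action: str       # e.g. "revoke" or "flag"
    identity: str
    entitlement: str
    risk: float       # engine-assigned, 0.0 (low) to 1.0 (high)


Engine = Callable[[dict], list[Recommendation]]


# Tier 2: purpose-built engines (hypothetical rules for illustration).
def recertification_engine(data: dict) -> list[Recommendation]:
    # Recommend revoking entitlements unused for 90 days or more.
    return [
        Recommendation("recertification", "revoke", user, ent, 0.3)
        for user, ent, days_unused in data.get("usage", [])
        if days_unused >= 90
    ]


def anomaly_engine(data: dict) -> list[Recommendation]:
    # Flag identities an upstream feed has marked as behaving unusually.
    return [Recommendation("anomaly", "flag", user, "*", 0.9) for user in data.get("unusual", [])]


# Tier 4: governance decides what may run without a human in the loop.
@dataclass
class GovernancePolicy:
    max_auto_risk: float = 0.5
    blocked_actions: frozenset = frozenset()

    def decide(self, rec: Recommendation) -> str:
        if rec.action in self.blocked_actions:
            return "blocked"
        return "auto" if rec.risk <= self.max_auto_risk else "needs_approval"


# Tier 3: the orchestrator runs whatever engines are registered and routes results.
class Orchestrator:
    def __init__(self, policy: GovernancePolicy) -> None:
        self.policy = policy
        self.engines: dict[str, Engine] = {}

    def register(self, name: str, engine: Engine) -> None:
        # Adding an engine requires no change to the other tiers.
        self.engines[name] = engine

    def run(self, data: dict) -> dict[str, list[Recommendation]]:
        routed: dict[str, list[Recommendation]] = {"auto": [], "needs_approval": [], "blocked": []}
        for engine in self.engines.values():
            for rec in engine(data):
                routed[self.policy.decide(rec)].append(rec)
        return routed


# Tier 1: a hypothetical in-memory snapshot of the data sources.
data = {
    "usage": [("alice", "storage:marketing-bucket", 120), ("bob", "crm:read", 5)],
    "unusual": ["carol"],
}
orchestrator = Orchestrator(GovernancePolicy())
orchestrator.register("recertification", recertification_engine)
orchestrator.register("anomaly", anomaly_engine)
result = orchestrator.run(data)
print({bucket: [r.identity for r in recs] for bucket, recs in result.items()})
# {'auto': ['alice'], 'needs_approval': ['carol'], 'blocked': []}
```

Swapping the policy (for example, blocking all automated revocations during an audit freeze) changes routing without touching any engine, which is the flexibility the federated model is meant to provide.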

This transformation addresses the 4 key business drivers for AI in IAM:

Managing Complexity: The federated model breaks down the complexity of IAM into manageable, specialized domains. Consider a single marketing campaign: it involves a new contractor (identity), access to a cloud storage bucket (entitlement), an API key for a new martech tool (machine identity), and customer data access. That's four distinct identity and access elements, with dozens of permissions, for a single, temporary project. Now multiply that by 100 projects across the enterprise.

Increasing Velocity: Automation at every layer removes manual bottlenecks and accelerates business processes.

Reducing Risk: The Anomaly Detection AI and other specialized engines provide continuous, real-time risk monitoring.

Improving Efficiency: The Provisioning and Recertification AIs automate the most time-consuming manual tasks, freeing up our skilled teams for more strategic work.

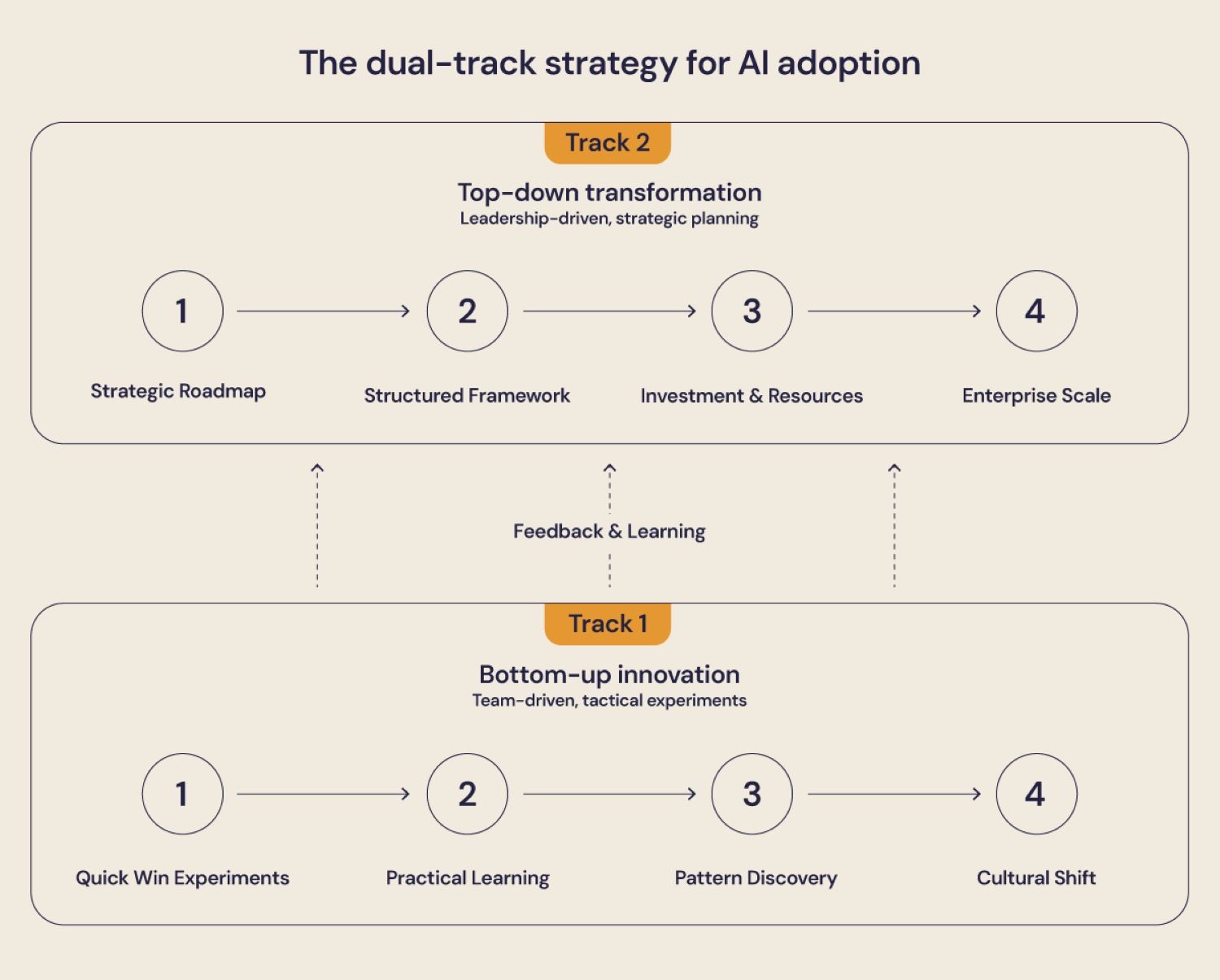

The "How": The dual-track strategy for adoption

Adopting AI in IAM is not a single project; it's a program that requires a dual-track strategy. As identity security experts note, identity strategies must adapt to address both the complexities of modern threats and the opportunities AI presents. Think of the dual-track strategy as building a modern skyscraper.

Track 1 (Bottom-up innovation): This is the crew on the ground testing the soil, finding the best materials, and learning the new tools. This is about empowering our teams to start experimenting with generative AI today. It’s about giving them the tools and the permission to find quick wins, learn what works, and build a data-driven mindset. This track is where we discover the practical realities of our data and processes.

Track 2 (Top-down transformation): This is the master architect's blueprint for the entire structure, ensuring that every floor works together to create a stable and functional building. This is the leadership-driven, strategic track. It's about creating the roadmap, securing the investment, and building the foundational capabilities that will allow us to scale the successes from Track 1 across the enterprise.

The key is that these two tracks run in parallel and continuously inform each other. The learnings from the bottom-up experiments provide the data and the business case for the top-down strategic investments.

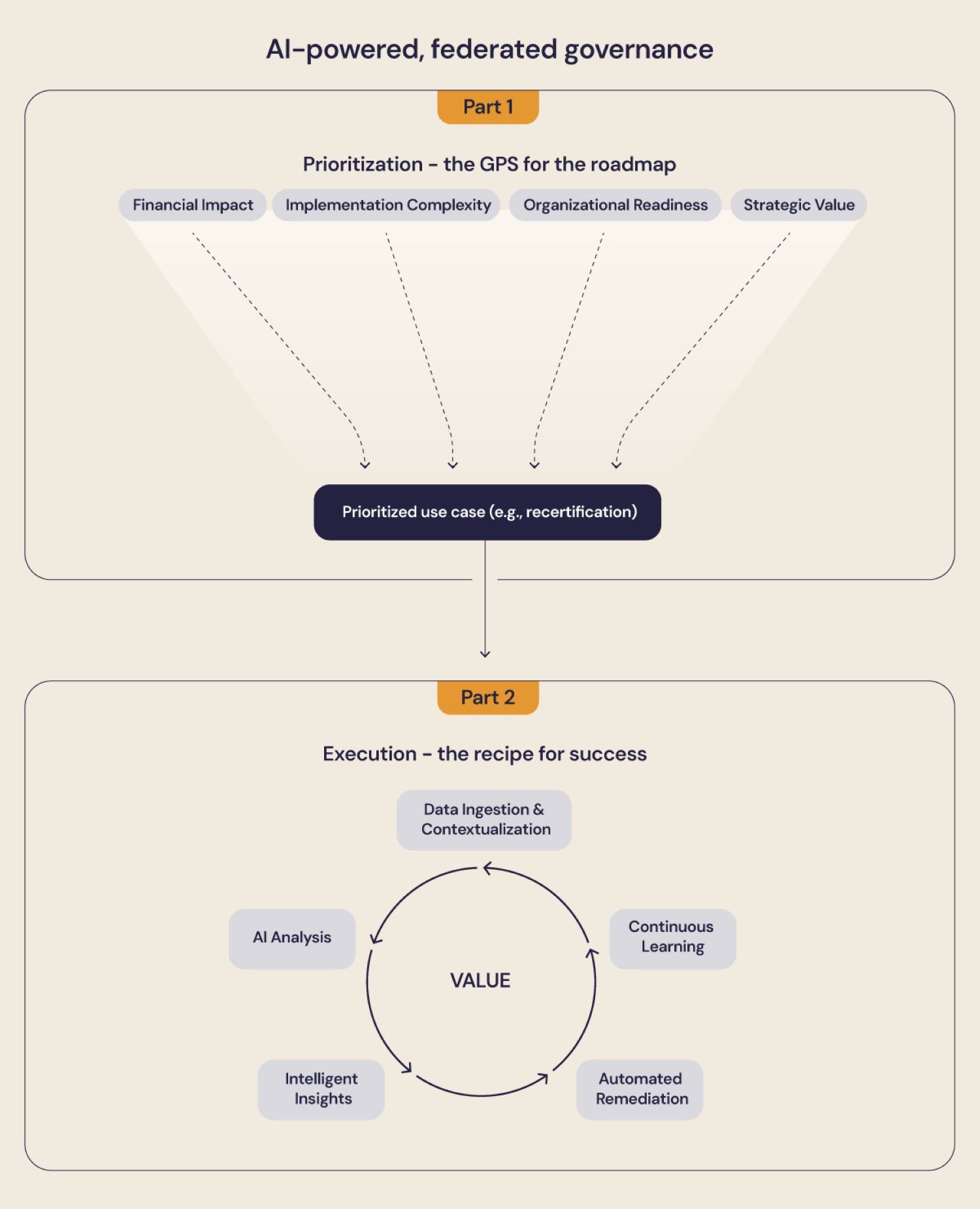

The "What": A framework for strategic execution

To execute the top-down track, we need a structured framework. This framework has two parts: a model for prioritization and a playbook for execution.

Prioritization: A 4-Pillar Model. How do we choose where to start? We use a 4-Pillar Model to evaluate potential use cases. Think of this as the GPS for our roadmap, helping us choose the best route. We evaluate each use case against:

Financial Impact ($): What is the potential ROI?

Implementation Complexity (Technical): How hard is it to build?

Organizational Readiness (People): Can we succeed culturally and politically?

Strategic Value: Does this build momentum and foundational capabilities?

Execution: The Universal AI Value Chain. Once we’ve chosen a use case, how do we execute it? We use a 5-step iterative playbook. Think of this as the recipe we follow to ensure a successful outcome:

Data Ingestion & Contextualization: Gather the necessary data.

AI Analysis: Analyze the data to find insights.

Intelligent Insights: Translate the insights into actionable recommendations.

Automated Remediation: Automate the response to the recommendations.

Continuous Learning: Feed the outcomes back into the system to make it smarter.
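The five steps form a loop rather than a straight line. Here is a minimal Python sketch of that loop under stated assumptions: the `risk_score` field stands in for whatever model a real AI Analysis step would run, remediation only records actions instead of calling an orchestration layer, and the threshold and its adjustment rule (raise it when more than 20% of recommendations are rejected by reviewers, lower it when fewer than 5% are) are hypothetical choices for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ValueChain:
    threshold: float = 0.7                       # hypothetical action threshold
    actions_taken: list = field(default_factory=list)

    def ingest(self, raw: list[dict]) -> list[dict]:
        # 1. Data ingestion & contextualization: keep only records tied to an identity.
        return [r for r in raw if r.get("user")]

    def analyze(self, records: list[dict]) -> list[tuple[dict, float]]:
        # 2. AI analysis: placeholder that reads a precomputed model score.
        return [(r, float(r.get("risk_score", 0.0))) for r in records]

    def insights(self, scored: list[tuple[dict, float]]) -> list[dict]:
        # 3. Intelligent insights: turn scores above the threshold into recommendations.
        return [
            {"user": r["user"], "action": "review_access", "score": s}
            for r, s in scored
            if s >= self.threshold
        ]

    def remediate(self, recs: list[dict]) -> list[dict]:
        # 4. Automated remediation: a real program would hand these to the
        # orchestration layer; this sketch only records them.
        self.actions_taken.extend(recs)
        return recs

    def learn(self, false_positives: int, total: int) -> None:
        # 5. Continuous learning: tighten the threshold when reviewers reject
        # too many recommendations, loosen it when almost none are rejected.
        if total == 0:
            return
        fp_rate = false_positives / total
        if fp_rate > 0.2:
            self.threshold = min(0.95, self.threshold + 0.05)
        elif fp_rate < 0.05:
            self.threshold = max(0.5, self.threshold - 0.05)

    def run_cycle(self, raw: list[dict]) -> list[dict]:
        return self.remediate(self.insights(self.analyze(self.ingest(raw))))


chain = ValueChain()
raw = [
    {"user": "alice", "risk_score": 0.9},
    {"user": "bob", "risk_score": 0.2},
    {"risk_score": 0.99},  # no identity attached; dropped at ingestion
]
print(chain.run_cycle(raw))  # one recommendation, for alice
chain.learn(false_positives=1, total=1)  # a reviewer rejected it
print(round(chain.threshold, 2))  # 0.75
```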

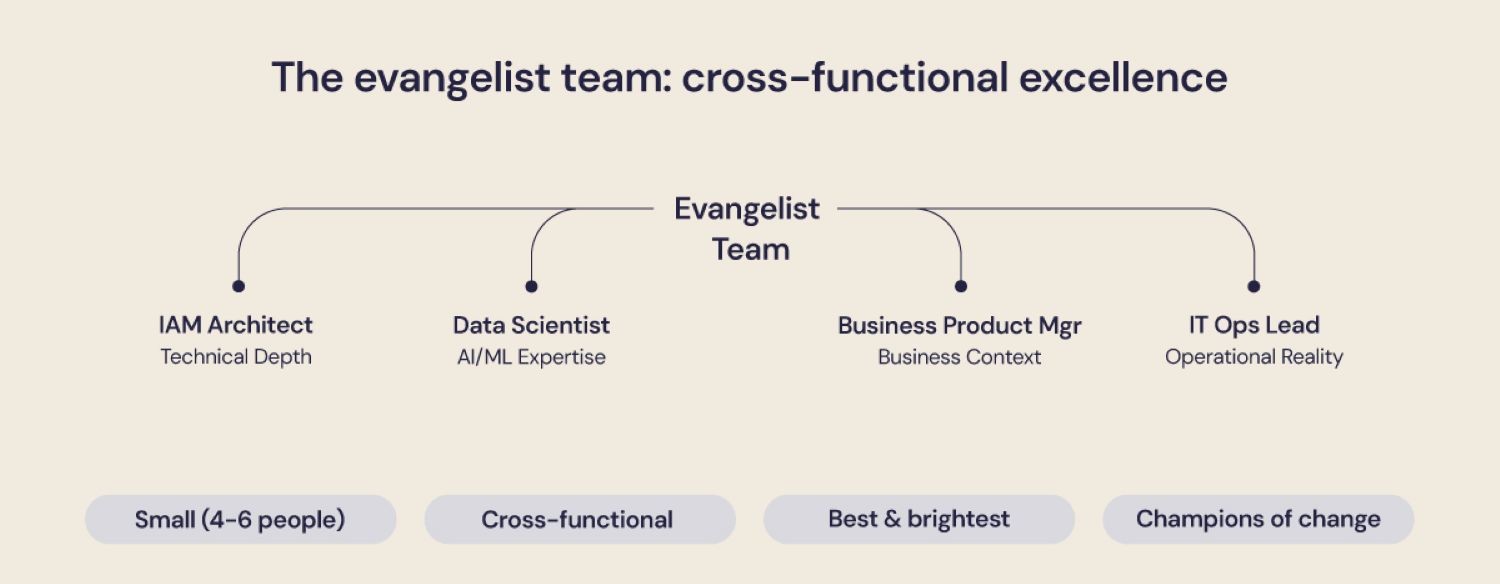

The "Who": Building the team and culture

Technology is only part of the equation. Success requires a deliberate focus on people and culture. The Evangelist Team is our internal ‘Startup Team.’ They are a small, agile, and entrepreneurial group tasked with building a new, scalable model from the ground up, proving its value, and then creating the playbook for the rest of the organization to follow.

The Evangelist Team

This is a small, cross-functional team of some of your best and brightest from security, IT, and the business. They are the champions who will drive the initial pilots and evangelize the value of AI across the organization. Our first evangelist team might consist of one senior IAM architect, one data scientist, a product manager from a key business unit (like marketing or sales), and an IT operations lead. This group has the technical depth, business context, and operational reality to ensure the first pilot is a success.

Executive Sponsorship

The startup team cannot succeed in isolation. They need active, visible support from senior leadership, ideally a C-level executive sponsor who can remove organizational barriers, secure resources, and champion the initiative at the highest levels. This sponsor is not a passive figurehead; they are an active advocate who attends key meetings, communicates the strategic importance to the board and leadership team, and ensures that the startup team has the political capital to drive change across organizational boundaries. Without this top-down air cover, even the best startup team will struggle to overcome the inevitable resistance and competing priorities.

Skills Development

We need to invest in upskilling our existing workforce. Our IAM professionals need to become comfortable working with data and AI, and our data scientists need to understand the nuances of IAM.

Change Management

We need to bring our business stakeholders and end-users along on the journey. This means communicating the value of AI, setting realistic expectations, and celebrating the wins.

Sidebar: An actionable guide to the 4-pillar prioritization model

To move from theory to practice, you need a structured way to evaluate and score potential AI use cases. Here is a simple model to help you and your teams get clarity and improve the velocity of your decision-making.

Step 1: Score Each Use Case (1-5) Against These Key Questions

Pillar 1: Financial Impact ($)

Risk Reduction: How directly does this reduce the risk of a costly breach? (1 = Indirectly / Low Impact; 5 = Very Directly / High Impact)

Cost Savings: What is the estimated annual cost savings from automating manual tasks? (1 = Very Low, <$50k/yr; 5 = Very High, >$500k/yr)

Time to Value: How quickly can we demonstrate a measurable financial return? (1 = Very Slow, >18 months; 5 = Very Fast, <6 months)

Pillar 2: Implementation Complexity (Technical)

Data Availability: Is the required data readily available, clean, and accessible? (1 = No, data is siloed/messy; 5 = Yes, fully available and clean)

Integration Effort: What is the level of integration effort required? (1 = Very High, requires custom development; 5 = Very Low, simple API)

Skill Alignment: Do we have the necessary technical skills in-house? (1 = No, requires external hiring; 5 = Yes, fully staffed)

Pillar 3: Organizational Readiness (People)

Business Sponsor: Is there a clear and influential business sponsor? (1 = No sponsor / not influential; 5 = Yes, a strong, vocal sponsor)

Change Management: How significant is the change management effort for end-users? (1 = Very High, major workflow changes for all; 5 = Very Low, transparent to users)

Stakeholder Alignment: Is there strong alignment between key stakeholder groups? (1 = Very Low Alignment / Conflicting priorities; 5 = Very Strong Alignment)

Pillar 4: Strategic Value

Momentum: How visible and impactful will a win be in building credibility? (1 = Low visibility / Internal IT win only; 5 = Very High Impact / C-suite visibility)

Foundational Capability: Does this build a reusable capability that accelerates future projects? (1 = No, it is a one-off solution; 5 = Yes, highly foundational)

Risk Alignment: How closely does this align with our top enterprise risks or objectives? (1 = Not aligned; 5 = Very Strong Alignment)

Detailed Scoring Rubric

To ensure consistent scoring, use the following detailed rubric:

| Category | Score 1 (Low) | Score 2 | Score 3 | Score 4 | Score 5 (High) |
|---|---|---|---|---|---|
| Pillar 1: Financial Impact ($) | | | | | |
| Risk Reduction | Indirectly / Low Impact | Some indirect link | Direct link, but low impact | Direct link, moderate impact | Very Directly / High Impact |
| Cost Savings | <$50k/yr | $50k - $100k/yr | $100k - $250k/yr | $250k - $500k/yr | >$500k/yr |
| Time to Value | >18 months | 12-18 months | 9-12 months | 6-9 months | <6 months |
| Pillar 2: Implementation Complexity | | | | | |
| Data Availability | Data is siloed/messy | Requires significant cleaning | Needs some work | Mostly available & clean | Fully available & clean |
| Integration Effort | Very High, custom dev | High, multiple integrations | Moderate, some custom work | Low, mostly configuration | Very Low, simple API |
| Skill Alignment | Requires external hiring | Requires significant training | Some skills exist, need help | Most skills are in-house | Fully staffed in-house |
| Pillar 3: Organizational Readiness | | | | | |
| Business Sponsor | No sponsor | Sponsor exists, not influential | Sponsor is influential, not engaged | Strong sponsor, some conflicts | Strong, vocal sponsor |
| Change Management | Very High, impacts all | High, impacts many users | Moderate, impacts some users | Low, impacts a small group | Very Low, transparent |
| Stakeholder Alignment | Very Low / Conflicting | Some alignment, major conflicts | Moderate alignment, some conflicts | Good alignment, minor issues | Very Strong Alignment |
| Pillar 4: Strategic Value | | | | | |
| Momentum | Low visibility (IT only) | Visible to IT leadership | Visible to some business units | High visibility to business leaders | C-suite visibility |
| Foundational Capability | One-off solution | Minor reusability | Some reusable components | Mostly reusable | Highly foundational |
| Risk Alignment | Not aligned | Loosely aligned | Moderately aligned | Strongly aligned | Very Strongly Aligned |

Step 2: Calculate the weighted score

Not all pillars are created equal. For most organizations starting out, readiness and strategic value are more important than pure financial impact or technical elegance.

| Pillar | Average Score (from Step 1) | Weight | Pillar Score |
|---|---|---|---|
| Organizational Readiness | (Sum of the 3 question scores) / 3 | 35% | Avg Score × 0.35 |
| Strategic Value | (Sum of the 3 question scores) / 3 | 30% | Avg Score × 0.30 |
| Financial Impact | (Sum of the 3 question scores) / 3 | 20% | Avg Score × 0.20 |
| Implementation Complexity | (Sum of the 3 question scores) / 3 | 15% | Avg Score × 0.15 |
| Total Prioritization Score | | 100% | Sum of Pillar Scores (maximum 5.0) |
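The Step 2 arithmetic is easy to automate in a spreadsheet or a few lines of code. Here is a minimal Python sketch, assuming each pillar's three Step 1 answers are collected as integers from 1 to 5; the function name `prioritization_score` and the example scores are hypothetical. Because every rubric question puts the favorable answer at 5 (for Implementation Complexity, 5 means easiest to implement), a higher total always means a stronger candidate.

```python
WEIGHTS = {
    "Organizational Readiness": 0.35,
    "Strategic Value": 0.30,
    "Financial Impact": 0.20,
    "Implementation Complexity": 0.15,
}


def prioritization_score(answers: dict[str, list[int]]) -> float:
    """Weighted 4-pillar score on a 1-5 scale.

    `answers` maps each pillar to its three question scores (1-5) from Step 1.
    """
    total = 0.0
    for pillar, weight in WEIGHTS.items():
        scores = answers[pillar]
        if len(scores) != 3 or not all(1 <= s <= 5 for s in scores):
            raise ValueError(f"{pillar}: expected three scores between 1 and 5")
        average = sum(scores) / 3
        total += average * weight
    return round(total, 2)


# Hypothetical use case, e.g. an AI-assisted access recertification pilot.
example = {
    "Organizational Readiness": [4, 3, 4],   # sponsor, change mgmt, alignment
    "Strategic Value": [4, 5, 4],            # momentum, foundational, risk alignment
    "Financial Impact": [3, 3, 4],           # risk reduction, cost savings, time to value
    "Implementation Complexity": [3, 4, 4],  # data, integration, skills
}
print(prioritization_score(example))  # 3.8
```

Working the example by hand: (11/3 × 0.35) + (13/3 × 0.30) + (10/3 × 0.20) + (11/3 × 0.15) = 1.28 + 1.30 + 0.67 + 0.55 ≈ 3.8. Scoring each candidate this way and sorting by the total gives a first-pass roadmap order.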

Erik is a cybersecurity executive and advisor with over 20 years of experience leading cloud security, identity, and enterprise risk transformation. Formerly SVP – Cloud Security & Architecture at M&T Bank, he has also held leadership roles at GitHub, Veracode, and ACV Auctions. Erik advises emerging cybersecurity innovators, including Cyera, Legit Security, Cyberstarts, and Permiso Security, and through his firm, Pragmatic Strategies, helps organizations turn complex security challenges into actionable strategy and measurable results.