Identity as a Decision Accelerator: How Does AI Transform Security Decisions?

I've lost count of the number of times I've been in rooms - physical or virtual - with dozens of our smartest people trying to answer what should be a straightforward question: what's the risk here, really?

Those moments are where we earn our reputation in identity security. Not by finding problems, but by how quickly and clearly we can help a decision move forward.

Most of the time, this isn't happening during a live incident. It's happening during normal business change - building new capabilities, re-architecting applications, modernizing platforms, integrating acquisitions, or trying to move faster with data and technology. Incidents are rarer. Change is constant. And this is where the real friction between cybersecurity and the business shows up.

We pull in a dozen people - analysts, architects, product owners, their managers. We reconstruct history from memory. We trade screenshots, spreadsheets, log snippets, and gut instincts. We rely on tribal knowledge and crossed fingers, hoping the right data surfaces in conversation so we can see the situation clearly enough to make a decision.

And even then, we're often still unsure.

This isn't a talent problem. It's not a commitment problem.

It's because modern identity risk has outpaced our ability to reason about it using human-only processes.

Over time, that gap has shaped how we show up. We've long been seen as the department of "no" - or at least the department of friction - not because we want to block progress, but because when identity risk is opaque, the safest move is to stop rather than ask, "how do we make this work?"

That's not a sustainable role for us.

And if we're painful to involve during everyday change, teams don't wait for an incident to prove the point—they already work around us.

If cybersecurity is going to be a true partner in business growth, evolution, and decision-making, we need a different operating model - one where we can help the business see identity risk clearly enough to move forward with confidence.

This is where identity security and AI transformation matter.

Why traditional identity governance fails: The cost of opaque risk

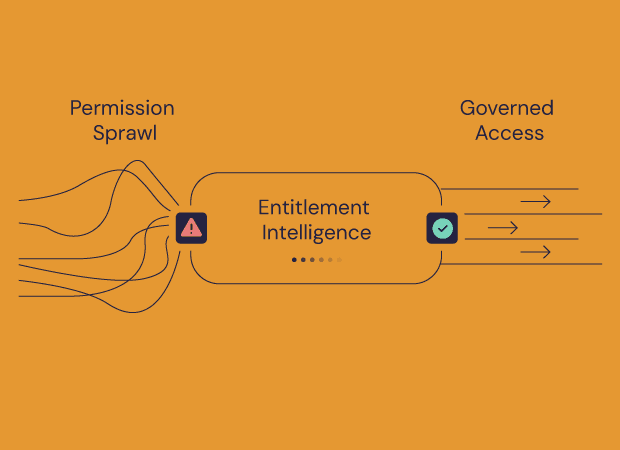

Anyone who's run IAM at scale knows the math. Thousands of applications, tens of thousands of users, millions of entitlements - identity data scattered across more systems than anyone can reliably keep track of.

I've been in organizations where we had dozens of systems holding some version of "identity truth," and still couldn't answer a basic question without pulling people into a room to sort it out.

But the bigger cost isn't operational. It's organizational.

When we can't clearly articulate identity risk, we struggle to participate meaningfully in design conversations.

Requirements show up late. Reviews feel subjective. Decisions stall. Trust erodes.

Every delayed access decision, every rubber-stamped certification, every last-minute security objection reinforces the perception that cybersecurity slows things down - not because it's wrong, but because it can't move at the speed of change.

That's how cyber becomes a bottleneck instead of a partner.

Gartner found that 68% of security teams cite "inability to quickly assess identity risk" as a primary cause of delayed business initiatives. When you can't answer basic questions about who has access and why, the only safe answer becomes "let me get back to you"—and by then, the business has often moved on.

For years, many of us have talked about how valuable it would be to have a single, coherent view of who has access to what, across every system, updated in real time.

It wasn't a neatly defined goal so much as a shared aspiration - something we all knew would make our lives easier if it were possible. We moved toward it directionally: new platforms, better integrations, improved role models. But at enterprise scale, it never quite came together.

What we were really reaching for wasn't a pane of glass. It was decision clarity.

We wanted to be able to answer, quickly and credibly:

Who has access to this?

Why do they have it?

How is it actually being used?

What's the real risk if we change it?

And without that clarity, the costs compound - not just operationally, but organizationally.

How AI transforms identity risk assessment (not a silver bullet)

I want to be clear about something up front: I don't think AI is ready to do this perfectly or at scale - and most organizations aren't ready either.

If you've spent any time trying to roll out any new technology inside a large enterprise, you already know how this goes. Data is messy. Context lives in people's heads. Access models are inconsistent.

The idea that you can flip a switch and suddenly reason about identity risk across the entire enterprise is fantasy.

But that doesn't mean AI isn't useful.

Where AI is finally ready to help is in very specific, very practical moments - the moments where we usually end up pulling a dozen people into a room to reconstruct what's going on. It can aggregate identity data, usage signals, and peer context quickly enough to reduce ambiguity and give us a better starting point for a decision.

Forrester found that organizations with mature identity intelligence capabilities - those that can rapidly assess who has access, why, and the associated risk - reduce security decision cycles by an average of 60% while simultaneously improving confidence in those decisions.

As I outlined in 5 Quick Wins for AI in IAM, AI's value comes from processing massive amounts of unstructured identity data and identifying patterns that would take humans months to discover.

The intelligent thread approach to identity security

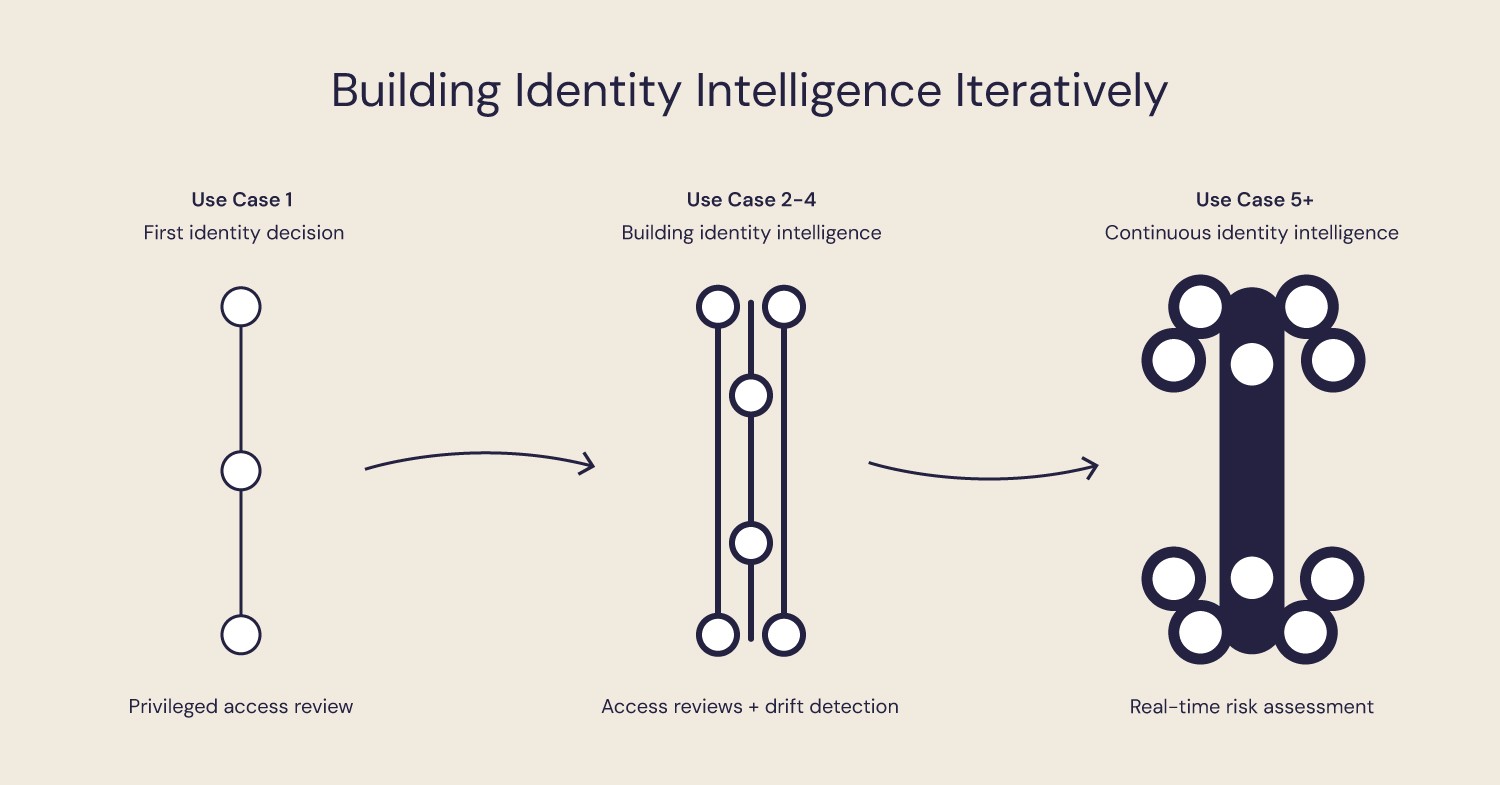

A metaphor I keep coming back to is an intelligent thread. Not a fabric. Not a platform. A thread.

At first, it's thin. It might only connect a few systems or support one painful decision—standing privileged access, access reviews everyone knows are broken, or entitlements that have quietly drifted over time. But each time we apply AI to a real identity problem, we strengthen that thread. We preserve context. We make the next decision a little easier than the last one.

This isn't a big-bang transformation. And it shouldn't be.

The way forward is the same way I've seen security capabilities actually get built: iteratively. We pick a use case where the pain is real and the data is reachable. We apply AI to support a specific decision. We learn where it helps, where it falls down, and what our organization is actually ready for.

Then we do it again.

Over time, those pockets of capability start to connect. The intelligent thread gets stronger. And we stop starting from zero every time the business asks, "Can we do this?"

This iterative capability-building approach aligns with the sustainable AI-powered IAM program framework I described earlier - focusing on building reusable identity intelligence rather than one-off solutions.

Privileged access review with AI-powered identity intelligence

Imagine this isn't a demo - it's a real request from the business.

"We're concerned about standing privileged access in production. We want it reduced—but we can't afford to break anything."

This is where I've seen us slow things down. Not because the concern isn't valid, but because answering it usually means weeks of analysis, tribal knowledge, and uncomfortable judgment calls. By the time there's an answer, the business has often lost patience.

In an AI-augmented identity model, the conversation starts with evidence instead of policy.

An analyst asks: "Show me users with elevated database access, how that compares to their peers, and how that access is actually being used."

One case that surfaces is a finance analyst with broad database permissions across several production systems. Compared to peers in the same role, the access stands out. Usage data shows most privileged permissions haven't been exercised in months. The databases themselves fall under SOX scope.
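To make the peer comparison and the "hasn't been exercised in months" check concrete, here is a minimal sketch. It assumes entitlements and last-used dates have already been exported into plain dictionaries; the function names, entitlement strings, the 50% peer threshold, and the 90-day idle window are all hypothetical choices for illustration, not a reference to any particular product.

```python
from collections import Counter
from datetime import date, timedelta

def peer_outliers(entitlements_by_user, user, peers, threshold=0.5):
    """Entitlements `user` holds that fewer than `threshold` of peers hold."""
    held = set(entitlements_by_user.get(user, set()))
    if not peers:
        return held  # no peer group to compare against: everything stands out
    counts = Counter()
    for peer in peers:
        counts.update(entitlements_by_user.get(peer, set()))
    return {e for e in held if counts[e] / len(peers) < threshold}

def stale(entitlements, last_used, today, max_idle_days=90):
    """Entitlements never used, or not used within the last `max_idle_days`."""
    cutoff = today - timedelta(days=max_idle_days)
    return {e for e in entitlements
            if last_used.get(e) is None or last_used[e] < cutoff}

# Hypothetical data: one analyst and three peers in the same role.
entitlements_by_user = {
    "analyst": {"db.read", "db.admin", "db.ddl"},
    "peer1": {"db.read"},
    "peer2": {"db.read"},
    "peer3": {"db.read", "db.ddl"},
}
last_used = {"db.admin": date(2024, 1, 10), "db.ddl": date(2024, 6, 1)}

outliers = peer_outliers(entitlements_by_user, "analyst", ["peer1", "peer2", "peer3"])
unused_outliers = stale(outliers, last_used, today=date(2024, 6, 30))
```

The output is a starting point for the conversation, not a verdict: it narrows the question to the handful of permissions that are both unusual for the role and idle.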

The predictable objection follows: "She doesn't use it often - but quarter-end is different."

Instead of arguing, the team checks usage around prior closes. The recommendation shifts slightly: right-size standing access to peer baselines, and put a short-lived, well-scoped elevation path in place for close periods if it's needed.
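Checking usage around prior closes can be sketched in a few lines. This assumes privileged-use dates and quarter-close dates are available as simple lists; the seven-day window, the function names, and the recommendation wording are hypothetical, and a real decision would still involve the access owner.

```python
from datetime import date, timedelta

def usage_near_closes(usage_dates, close_dates, window_days=7):
    """Count privileged uses within +/- window_days of each close date.

    Assumes close dates are far enough apart that windows do not overlap.
    """
    window = timedelta(days=window_days)
    return {close: sum(1 for used in usage_dates if abs(used - close) <= window)
            for close in close_dates}

def recommend(near_close_counts, total_uses):
    """Turn the usage pattern into a starting recommendation."""
    near = sum(near_close_counts.values())
    if total_uses == 0:
        return "remove standing access"
    if near == total_uses:
        return "replace standing access with time-boxed elevation for close periods"
    return "review with owner: usage occurs outside close windows"

# Hypothetical data: two quarter-ends and three privileged uses.
closes = [date(2024, 3, 31), date(2024, 6, 30)]
uses = [date(2024, 3, 29), date(2024, 4, 2), date(2024, 6, 28)]

counts = usage_near_closes(uses, closes)
suggestion = recommend(counts, total_uses=len(uses))
```

Here every recorded use falls inside a close window, which supports the "right-size plus short-lived elevation" recommendation rather than either extreme.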

This is what the intelligent thread looks like in practice.

The decision isn't framed as "security says no."

It's "here's what we know, here's the risk, and here's how we move forward."

That's the difference between slowing change and enabling it responsibly.

This evidence-based approach requires what I call the "Policy Mesh": a structured correlation between business policies and technical implementations that makes identity risk visible and actionable.

How to implement AI-powered identity decision making

This isn't about buying an AI tool or rolling out a grand architecture.

It's about building the capability to reason about identity risk early enough to enable change instead of reacting to it. One use case at a time. One decision at a time. Each iteration builds muscle, trust, and institutional memory.

Platforms like Opti's entitlement intelligence solution enable this by continuously correlating identity data, usage patterns, and policy requirements—turning one-off analyses into operational capabilities.

Start with these high-value identity use cases:

Standing privileged access reduction - Where excessive permissions create obvious risk

Access certification overhaul - Replace rubber-stamping with evidence-based reviews

Entitlement drift detection - Surface permissions that have quietly accumulated over time

Peer baseline analysis - Compare individual access against role-appropriate patterns

Just-in-time access enablement - Reduce standing permissions through temporary elevation
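As one illustration from the list above, entitlement drift detection can start as nothing more than comparing two snapshots. The sketch below assumes each snapshot is a dictionary of user to entitlement set and that approved grants are available as (user, entitlement) pairs; all names and data are hypothetical.

```python
def entitlement_drift(baseline, current, approved=frozenset()):
    """Entitlements present now that were neither in the baseline nor approved.

    `approved` is a set of (user, entitlement) pairs from the request system.
    """
    drift = {}
    for user, entitlements in current.items():
        added = set(entitlements) - set(baseline.get(user, set()))
        unexplained = {e for e in added if (user, e) not in approved}
        if unexplained:
            drift[user] = unexplained
    return drift

# Hypothetical snapshots taken a quarter apart.
baseline = {"analyst": {"db.read"}, "engineer": {"repo.write"}}
current = {
    "analyst": {"db.read", "db.admin", "reports.export"},
    "engineer": {"repo.write"},
}
approved = {("analyst", "reports.export")}

drift = entitlement_drift(baseline, current, approved)
```

Even a comparison this simple preserves context between reviews, which is the point of strengthening the thread one use case at a time.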

Each successful use case strengthens the thread. Over time, we become easier to work with—not because standards are lower, but because clarity is higher.

From "Department of No" to strategic enabler

For most of my career, we've been rewarded for finding problems, not for helping solve them. That instinct keeps organizations safe - but it also shapes how others experience working with us.

The opportunity in front of us isn't about AI tools or identity platforms. It's about whether we're willing to change how we participate in decisions. Whether we can move from reconstructing identity risk after the fact to contributing clarity while change is still possible.

AI-augmented identity won't fix that overnight. But used deliberately, one use case at a time, it can start to shift the relationship - less friction, better conversations, and decisions made with more confidence.

That's not a technical transformation.

It's a leadership choice.

And it's one we can't afford to avoid much longer.

Erik is a cybersecurity executive and advisor with over 20 years of experience leading cloud security, identity, and enterprise risk transformation. Formerly SVP – Cloud Security & Architecture at M&T Bank, he has also held leadership roles at GitHub, Veracode, and ACV Auctions. Erik advises emerging cybersecurity innovators, including Cyera, Legit Security, Cyberstarts, and Permiso Security, and through his firm, Pragmatic Strategies, helps organizations turn complex security challenges into actionable strategy and measurable results.