From roles to reasoning: Why the future of IAM is AI-native

Two years ago, after spending nearly two decades in cybersecurity, I found myself at a crossroads, uncertain about my next move. I knew I wanted to build something big in identity and cybersecurity; I just didn't know exactly what.

As I looked at the IAM landscape, one thing became clear.

While organizations wrestled with the same challenges they'd faced for decades - rubber-stamped access requests, complex application integrations, and entitlement models no one truly understood - a question started to emerge:

Could AI finally address what 20 years of traditional IAM innovation couldn't?

At the recent Gartner Identity & Access Management Summit 2025, I had the opportunity to share insights on why AI-native IAM represents more than just another technology cycle. I believe it's a complete paradigm shift that will fundamentally reconstruct how organizations approach identity governance and access management.

The identity crisis: Innovation stood still

The uncomfortable truth about the IAM industry is that it experienced virtually no meaningful innovation for two decades. While other areas of cybersecurity evolved rapidly, identity governance platforms remained trapped in the same patterns: hard-coded policies, manual system integrations, predefined rules, and workflows that attempted to predict the unpredictable.

The consequences of this stagnation are severe. According to recent research, around 80% of data breaches now involve compromised or abused privileged credentials. The Identity Theft Resource Center's 2024 report revealed that four of the five largest data breaches (including incidents at Ticketmaster, AT&T, and Change Healthcare) could have been prevented with better IAM practices.

The problem wasn't a lack of trying. It was that traditional approaches couldn't scale to meet the complexity of modern enterprise environments.

Four fundamental problems of legacy IAM

Understanding why AI represents such a transformative opportunity requires examining the core challenges that have plagued identity governance:

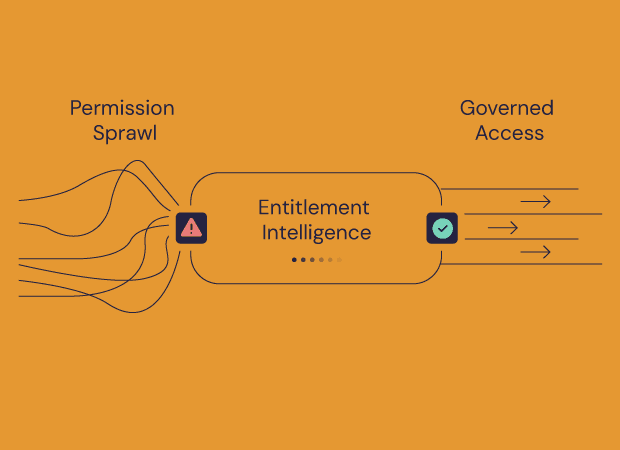

1. Entitlement models: The complexity nobody can decode

What can a specific employee do in your ERP system? The honest answer in most organizations is: nobody knows - including the employee themselves.

Entitlement models vary wildly across applications. Saying someone is an "admin" means entirely different things depending on context. An admin in your bank account can't wire more than $5,000 without a second signatory. An admin in Microsoft Entra can create new users and email addresses. These roles share a name but have completely different implications for risk and access.

When someone requests new permissions, there are typically dozens - sometimes hundreds - of ways to grant that access:

Add the person to an existing group with those permissions (they get additional unrelated permissions)

Grant the permission to a group they already belong to (everyone in that group gets the new permission)

Create a custom role with the specific permissions needed

Each option has different security implications. Realistically, no human can evaluate all possibilities against organizational policies, compliance requirements, and least-privilege principles.

As a result, teams default to the simplest option: giving users the same access as their teammates, regardless of whether it's appropriate.
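The trade-off among the three grant options above can be made concrete with a small sketch. It is illustrative only: the `GrantOption` type, the permission names, the user counts, and the ranking rule (fewest unintended recipients first, then fewest surplus permissions) are all assumptions made for this example, not a description of any product's logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GrantOption:
    """One hypothetical way to satisfy an access request."""
    name: str
    surplus_permissions: frozenset  # unrequested permissions the requester would gain
    other_users_affected: int       # other users who would gain the permission as a side effect


def rank_options(options):
    """Order options by blast radius: side-effect users first, then surplus permissions."""
    return sorted(
        options,
        key=lambda o: (o.other_users_affected, len(o.surplus_permissions)),
    )


# Hypothetical figures for the three options described in the text.
options = [
    GrantOption("add requester to existing group",
                frozenset({"approve_invoices", "export_ledger"}), 0),
    GrantOption("grant permission to requester's current group",
                frozenset(), 14),
    GrantOption("create custom role", frozenset(), 0),
]

ranked = rank_options(options)
best = ranked[0]
print(best.name)
```

Even with only three options and two risk dimensions the ranking needs explicit rules; with hundreds of candidate paths and real policy constraints, manual evaluation quickly becomes impractical.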

2. Decision making: The rubber-stamp reality

Here's a statistic that should concern every security leader: 99.9% of access requests are approved as submitted. This isn't because all requests are appropriate - it's because reviewers lack the context, time, and tools to make informed decisions.

Access review fatigue is real.

When a manager sees a request for access to an expense reporting system, they approve it because it seems reasonable.

They don't consider:

Whether a least-privileged alternative exists

If the user actually needs permanent access or if just-in-time access would suffice

What other permissions this person already has across the environment

Whether this access aligns with the user's actual job responsibilities

The result is continuous privilege creep, where users accumulate unnecessary access over time, creating expanding attack surfaces.
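One simple way to picture privilege creep is to compare what a user has been granted with what they have actually used. The sketch below assumes hypothetical permission names and a hypothetical 90-day usage window; real platforms would draw both sets from entitlement data and audit logs.

```python
def unused_permissions(granted: set, used: set) -> set:
    """Return permissions that were granted but never exercised in the usage window."""
    return granted - used


# Hypothetical data for a single user.
granted = {"submit_expense", "approve_expense", "export_reports", "manage_users"}
used_last_90_days = {"submit_expense", "export_reports"}

stale = unused_permissions(granted, used_last_90_days)
stale_share = len(stale) / len(granted)

print(sorted(stale))
print(f"{stale_share:.0%} of granted permissions were unused")
```

A reviewer who sees this kind of comparison alongside a request has something concrete to weigh, rather than approving on instinct.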

3. Integrations: The never-ending service business

Traditional identity governance platforms marketed themselves as comprehensive solutions, but the reality was different. Based on insights I heard at this year’s Gartner summit, organizations routinely report application onboarding queues of 600+ applications with 8+ year projected timelines.

Why? Because each integration requires extensive manual work:

Extracting identity data from the target system

Understanding the application's unique entitlement model

Normalizing that data against your IGA platform

Building and testing provisioning workflows

Most of this work happens through professional services engagements, turning IGA into what it really is: a service business rather than a software solution. The typical enterprise has fewer than 50% of its applications properly onboarded, and most aren't happy with the results even for those that are integrated.

4. Workflows: Predicting the unpredictable

Shirley requests access to a resource. Her manager Jake needs to approve it. But Jake is on vacation, and the backup approver, Timmy, left the company a year ago.

The request sits in limbo.

Static workflows attempt to codify every possible scenario in advance. But real-world situations don't follow scripts. People change roles, teams reorganize, approval hierarchies shift, and exceptions become the norm.

The classic 80/20 problem means organizations spend enormous effort trying to handle edge cases that never quite work properly.

Why 2023 was the turning point

In early 2023, ChatGPT was capturing global attention, but the technology was still in its early days—intriguing but limited in real-world impact. However, conversations with AI researchers from Berkeley, University of Chicago, MIT, and the Weizmann Institute revealed a consistent insight: large language models would become very good at many things, but the real opportunity lay in making them excellent at specific, complex domains.

This represented the critical insight that led us to found Opti. The identity space needed more than AI features bolted onto existing platforms. It required AI-native architecture, where specialized models trained on IAM-specific problems could understand and reason about identity at a level no human team could achieve at scale.

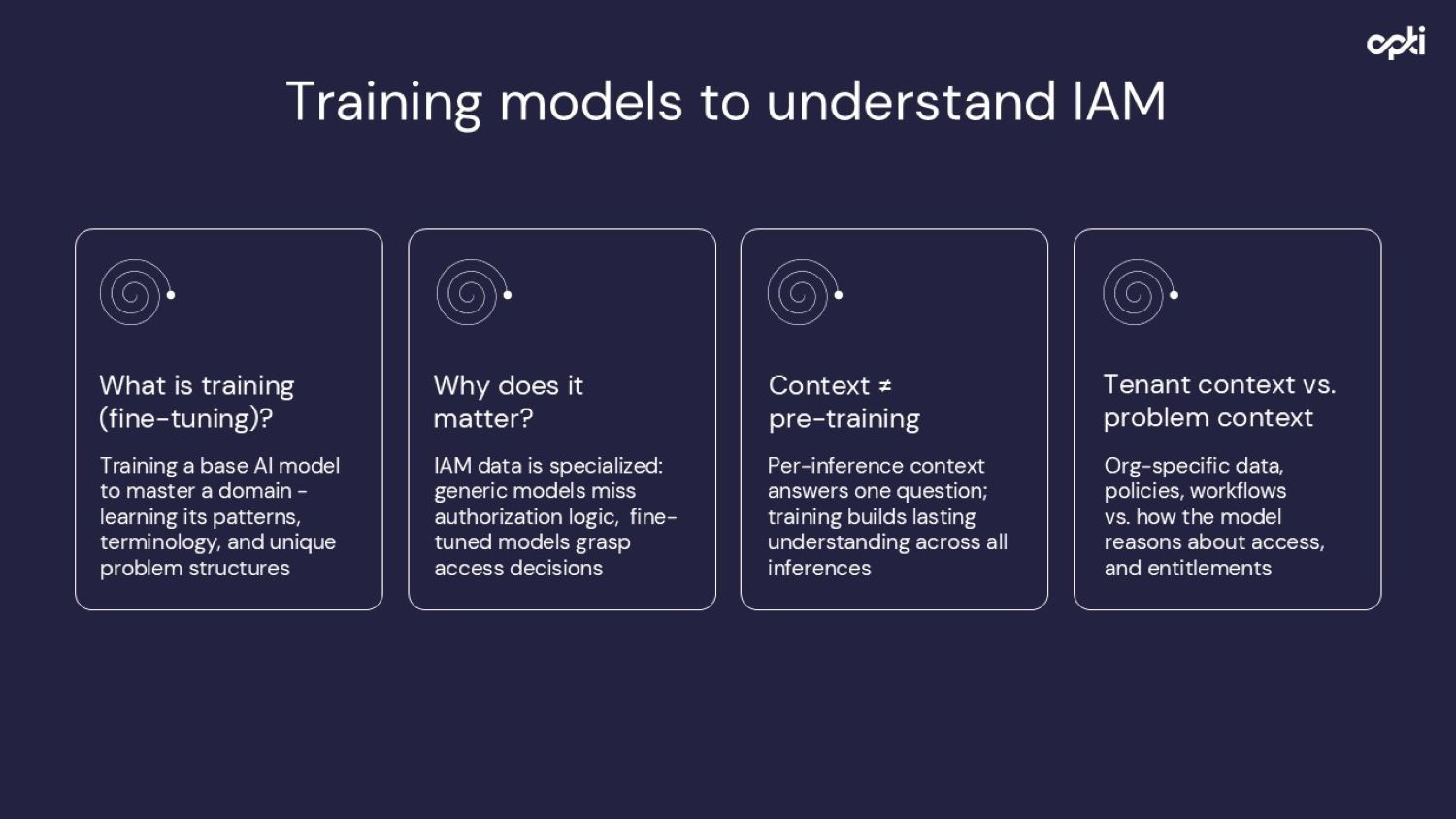

How AI model training changes everything

Understanding the difference between AI-enabled and AI-native IAM requires grasping what model training really accomplishes.

Consider this analogy:

If you asked ChatGPT to create a menu and recipes for a Michelin-star restaurant, it would likely fail. It simply wasn't trained on enough high-quality data from that specific domain. But ask it to create a menu for a diner, and it would excel, as diners are well-represented in its training data.

The same principle applies to IAM. Generic AI models can't reliably answer questions about the nuances of entitlements in mid-tier enterprise applications. They weren't trained on that data. But a model specifically trained on hundreds of applications' authorization schemes develops genuine understanding of how entitlement models work, enabling it to reason about new applications it has never seen.

The architecture of AI-native IAM

At Opti, our approach centers on four specialized LLMs, each trained to solve one of the fundamental problems outlined earlier:

Entitlements LLM

This model understands authorization data across diverse applications:

Defines entitlements for users, roles, and systems

Normalizes data across different authorization models (RBAC, ABAC, etc.)

Facilitates intelligent decisions for granting and revoking access

Detects over-privilege and validates policy compliance

All data is normalized into a graph database, providing structured information the LLM can query without hallucination. The model doesn't just pattern-match; it understands relationships between identities, groups, permissions, and resources.
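To illustrate why a graph is a natural fit for this kind of data, here is a minimal sketch of an identity graph held in a plain Python dictionary, with a traversal that resolves a user's effective permissions. The node naming scheme (`user:`, `group:`, `role:`, `perm:`) and the sample data are assumptions for this example; this is not Opti's actual schema or database.

```python
from collections import deque

# Hypothetical identity graph: each node maps to the nodes it grants or belongs to.
edges = {
    "user:shirley": ["group:finance", "role:expense_submitter"],
    "group:finance": ["role:ledger_viewer"],
    "role:ledger_viewer": ["perm:erp.ledger.read"],
    "role:expense_submitter": ["perm:expenses.submit"],
}


def effective_permissions(graph: dict, start: str) -> set:
    """Breadth-first walk from an identity to every permission it can reach."""
    seen = {start}
    queue = deque([start])
    permissions = set()
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, []):
            if neighbor in seen:
                continue
            seen.add(neighbor)
            if neighbor.startswith("perm:"):
                permissions.add(neighbor)  # leaf node: a concrete permission
            else:
                queue.append(neighbor)     # group or role: keep traversing
    return permissions


shirley_permissions = effective_permissions(edges, "user:shirley")
print(sorted(shirley_permissions))
```

Because the answer comes from a deterministic traversal of stored relationships, a model that queries the graph is reading facts rather than generating them.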

Risk mitigation LLM

This model brings business context to access decisions through:

Context-aware recommendations based on peer analysis and HR data

Understanding policies written in natural language rather than coded rules

Automated reasoning explaining why access should or shouldn't be granted

Confidence scores to help security teams focus on genuinely risky requests

Instead of rubber-stamping, reviewers receive intelligent insights: "This request is approved, but there's a least-privileged alternative that accomplishes the same goal with 60% fewer permissions."

AI-built integrations

Perhaps the most transformative model handles application onboarding:

Automatic learning of new application schemas and entitlements

Normalization of identity data across systems

Rapid scalability without relying on external services

The model was trained on hundreds of applications, learning to recognize common patterns in how applications structure authorization.

When encountering a new application, it applies this learned understanding to quickly normalize the data - reducing weeks or months of professional services work to hours or days.

Lifecycle management LLM

The fourth model enables:

Adaptive, context-aware lifecycle management

Dynamic approvals based on risk, timing, and changing conditions

Continuous validation to align access with actual usage

AI-driven orchestration that learns and adjusts

Rather than static workflows that attempt to predict every scenario, this model reasons about appropriate approvers, timing considerations, and fallback options in real time.
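The Shirley, Jake, and Timmy scenario from earlier shows what fallback reasoning has to handle. The sketch below is a deliberately simple, rule-based stand-in, not the model-driven approach described here: it checks the manager, then any configured backups, then escalates up the management chain. The `Person` type, the `resolve_approver` function, and the extra manager "dana" are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Person:
    name: str
    active: bool = True       # still employed
    available: bool = True    # not on leave
    manager: Optional[str] = None


def resolve_approver(requester: str, directory: dict, backups: dict) -> Optional[str]:
    """Find the first active, available approver, escalating up the management chain."""
    visited = set()
    current = directory[requester].manager
    while current is not None and current not in visited:
        visited.add(current)
        # Try the manager first, then that manager's configured backups.
        for candidate in [current, *backups.get(current, [])]:
            person = directory.get(candidate)
            if person and person.active and person.available:
                return candidate
        # Nobody at this level can approve: escalate one level up.
        current = directory[current].manager if current in directory else None
    return None


directory = {
    "shirley": Person("shirley", manager="jake"),
    "jake": Person("jake", available=False, manager="dana"),  # on vacation
    "timmy": Person("timmy", active=False),                   # left the company
    "dana": Person("dana"),                                   # hypothetical next-level manager
}
backups = {"jake": ["timmy"]}

approver = resolve_approver("shirley", directory, backups)
print(approver)
```

Even this small rule set has to anticipate absence, departures, and cycles in the reporting chain, which is the kind of enumeration that static workflows struggle to keep current.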

The crucial distinction: context vs. training

A common question about AI in IAM is:

"Why can't you just use context instead of training models?"

The answer reveals why truly AI-native platforms differ from AI-enabled features:

Training teaches the model fundamental understanding of IAM concepts—how different authorization models work, what common entitlement patterns mean, how access should map to business roles. This understanding persists across all customer environments.

Context provides organization-specific information: who the users are, what their roles are, what access they currently have, what your policies state. This is customer tenant data that gets embedded at query time.

Training is far more expensive but dramatically more effective. It's the difference between explaining parking rules to someone who doesn't understand cars versus someone who already knows how to drive.

What makes a platform truly AI-native?

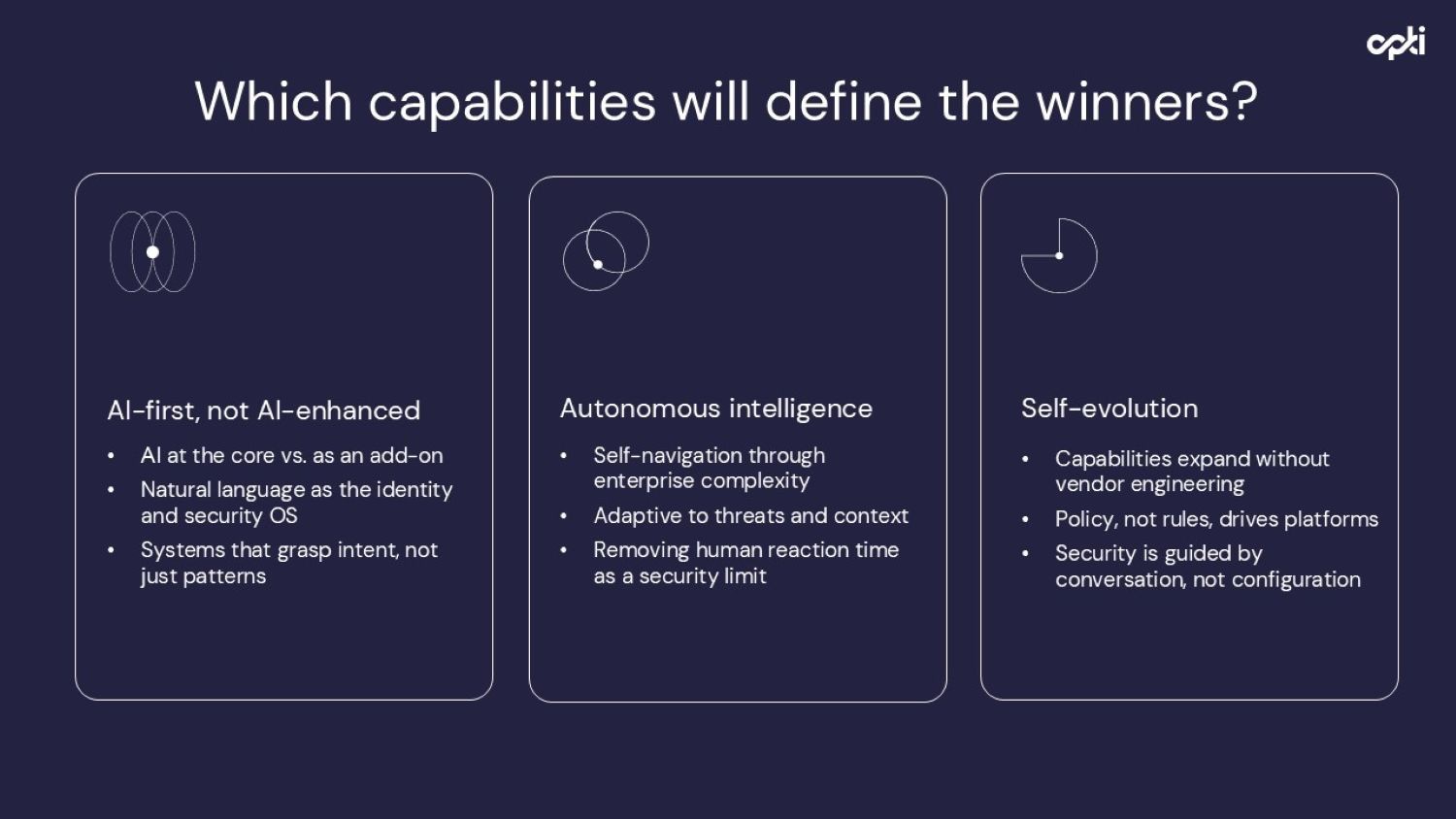

At the summit, I outlined three capabilities that will define winners in the emerging AI-native IAM landscape:

1. AI-first, not AI-enhanced

AI must be at the core of the architecture, not bolted on. Natural language becomes the operating system for identity and security. The platform should grasp intent, not just patterns.

A telling indicator: vendors offering MCP servers (letting external AI use their APIs) aren't AI-native - they're allowing AI to use traditional systems. True AI-native platforms have AI reasoning built into their foundation.

2. Autonomous intelligence

AI-native platforms should:

Navigate enterprise complexity independently

Adapt to threats and context without constant human intervention

Remove human reaction time as a security bottleneck

This doesn't mean AI runs unsupervised. It means AI handles the analysis and recommendation work that previously consumed security teams' time, allowing humans to focus on governance and strategic decisions.

3. Self-evolution

Perhaps most remarkably, AI-native platforms improve without vendor engineering. I worked with a customer who noticed new over-privileged accounts being identified without any product update. The model had evolved through continuous training on expanding datasets.

This represents a fundamental shift: policy and conversation drive the platform's capabilities rather than waiting for vendor release cycles.

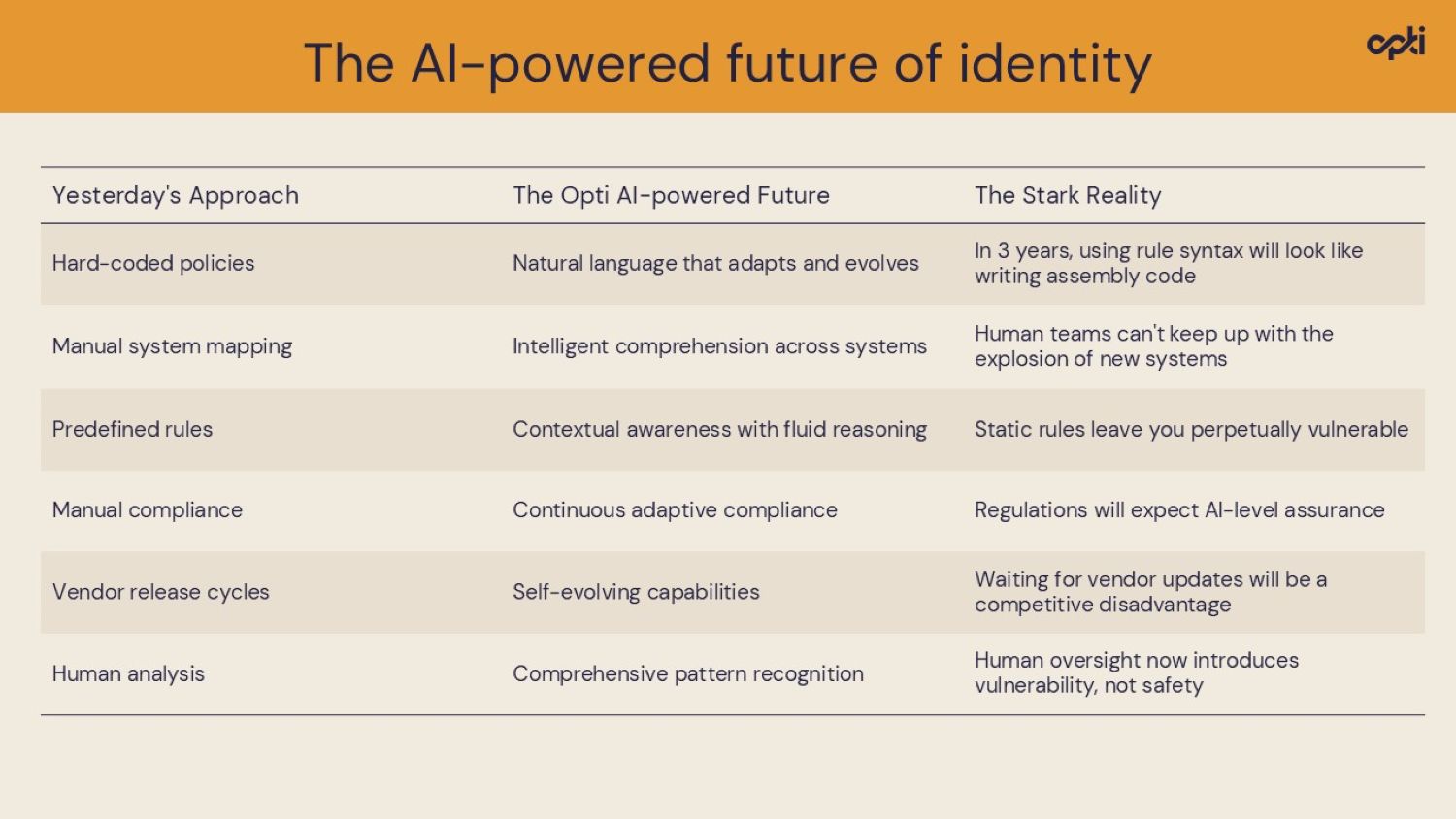

The stark reality: A paradigm shift in progress

Here’s an illustration that shows the magnitude of change ahead:

This isn't hyperbole.

Organizations continuing with traditional IAM approaches will find themselves increasingly unable to:

Scale governance across expanding application portfolios

Respond quickly enough to emerging identity threats

Meet evolving compliance requirements that assume AI-level monitoring

Compete with organizations leveraging AI-powered efficiency

Beyond the hype: substance over taglines

At the summit, a clear theme emerged: the industry is tired of vendors claiming "AI-powered" capabilities that amount to little more than marketing.

True AI-native platforms demonstrate:

Training over prompting: Models specifically trained on IAM problems, not generic LLMs with IAM prompts

Reasoning over pattern matching: Understanding why access should be granted, not just recognizing that similar users have it

Evolution over configuration: Platforms that improve through continuous learning, not manual rule updates

How IAM leaders can navigate the future

For security and identity governance leaders, the implications are profound:

Short term (1-2 years)

Evaluate whether your current IGA platform has genuine AI-native capabilities or just AI-enhanced features

Identify integration backlogs and assess whether AI-native platforms could dramatically accelerate application onboarding

Calculate the actual cost of rubber-stamping and privilege creep in your environment

Medium term (3-5 years)

Traditional IGA implementations will become increasingly difficult to justify given AI-native alternatives

Compliance frameworks will begin expecting AI-level continuous monitoring rather than periodic reviews

Organizations without AI-native IAM will face significant competitive disadvantages

Long term (5+ years)

Companies that aren't AI-native across their security stack will struggle to survive

Natural language will become the primary interface for security policy and governance

The distinction between AI-native and AI-enabled will determine market winners and losers

The identity crisis that defined two decades of minimal IAM innovation is ending. AI-native platforms are demonstrating that the fundamental problems - complex entitlements, rubber-stamped decisions, integration bottlenecks, and rigid workflows - can be solved through specialized AI models trained specifically for IAM.

This isn't just another technology cycle. It's a paradigm shift that will completely reconstruct how organizations think about identity governance and access management. The question isn't whether this transformation will happen—recent breaches and the emergence of AI-native capabilities make it inevitable.

The question is whether your organization will lead this transformation or be forced to catch up as the industry moves on.

Mille is a seasoned cyber specialist with over two decades of experience. He co-founded Indegy and served as CTO, steering its technology roadmap until acquisition by Tenable, where he became VP of OT Security Products. Today, he is Co-Founder & CPO at Opti, shaping its identity, access, and entitlement innovations, grounded in deep technical and threat-centric expertise.